GAN reading 1

https://machinelearningmastery.com/practical-guide-to-gan-failure-modes/

part 1: training a stable GAN

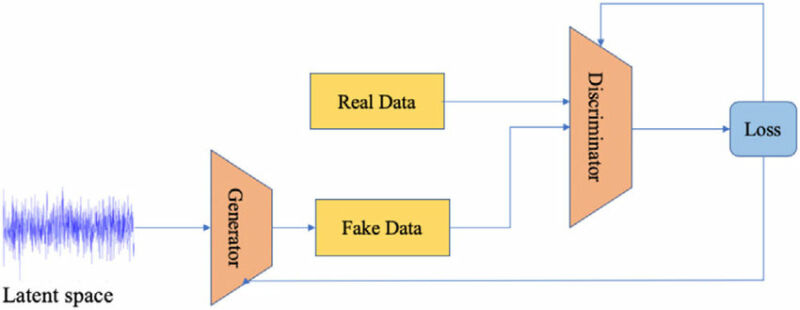

Training procedure:

- use the real image to train the discriminator

- generate the fake image and use the fake image to train the discriminator

  - first, generate latent points (random vector/tensor)

  - second, give latent points to the generator to generate the fake image

  - third, use the fake image to train the discriminator

- generate latent points and labels

- use latent points and labels to train the GAN model and get the loss

  - in the GAN model training process, discriminator training is disabled; so only the generator is trained

  - the output from the GAN model (Generator + Discriminator(training disabled)) is the GAN loss

- GAN model performance is evaluated and saved

  - generate latent points and give them to the generator to generate the fake image

  - the fake image is saved for evaluation and GAN model weights are saved
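
The procedure above can be sketched as one training step. This is a minimal sketch, not the article's code: it assumes `generator`, `discriminator`, and `gan` are objects exposing Keras-style `predict` and `train_on_batch` methods, that `gan` is the combined model with the discriminator frozen, and that the labels passed to `gan` are all 1 ("real") so the generator is rewarded for fooling the discriminator. The names `train_step` and `generate_latent_points` are illustrative.

```python
import numpy as np


def generate_latent_points(latent_dim, n_samples, rng):
    """Draw n_samples random vectors from a standard normal distribution."""
    return rng.standard_normal((n_samples, latent_dim))


def train_step(generator, discriminator, gan, real_images, latent_dim, rng):
    """One discriminator update on real data, one on fake data, one generator update."""
    n = real_images.shape[0]

    # 1. Train the discriminator on real images, labelled 1.
    d_loss_real = discriminator.train_on_batch(real_images, np.ones((n, 1)))

    # 2. Generate fake images from latent points and train the
    #    discriminator on them, labelled 0.
    z = generate_latent_points(latent_dim, n, rng)
    fake_images = generator.predict(z)
    d_loss_fake = discriminator.train_on_batch(fake_images, np.zeros((n, 1)))

    # 3. Train the generator through the combined model. The discriminator
    #    is assumed to be frozen inside `gan`, and the labels are 1 so the
    #    loss is low when the fakes are classified as real.
    z = generate_latent_points(latent_dim, n, rng)
    g_loss = gan.train_on_batch(z, np.ones((n, 1)))

    return d_loss_real, d_loss_fake, g_loss
```

Calling `train_step` in a loop over batches, and periodically saving generated images and model weights, would cover the evaluation step as well.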

A stable GAN will have a discriminator loss around 0.5, typically between 0.5 and maybe as high as 0.7 or 0.8. The generator loss is typically higher and may hover around 1.0, 1.5, 2.0, or even higher.

The accuracy of the discriminator on both real and generated (fake) images will not be 50%, but should typically hover around 70% to 80%.

The quality of the generated images can and does vary across the run, even after the training process becomes stable.
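
These rules of thumb can be turned into a quick check on logged metrics. The sketch below is only illustrative: the function name `stability_report`, the averaging window, and the exact cutoffs (0.5 to 0.8 for discriminator loss, 70% to 80% for discriminator accuracy) are assumptions drawn from the ranges quoted above, not hard limits.

```python
from statistics import mean


def stability_report(d_losses, d_accuracies, window=20,
                     d_loss_range=(0.5, 0.8), d_acc_range=(0.7, 0.8)):
    """Compare recent discriminator metrics with rule-of-thumb ranges."""
    # Average over the last `window` steps to smooth out batch noise.
    recent_loss = mean(d_losses[-window:])
    recent_acc = mean(d_accuracies[-window:])
    loss_ok = d_loss_range[0] <= recent_loss <= d_loss_range[1]
    acc_ok = d_acc_range[0] <= recent_acc <= d_acc_range[1]
    return {
        "recent_d_loss": recent_loss,
        "recent_d_accuracy": recent_acc,
        "d_loss_in_range": loss_ok,
        "d_accuracy_in_range": acc_ok,
        "looks_stable": loss_ok and acc_ok,
    }
```

A run that falls outside these ranges is not necessarily broken; the report is a prompt to look at the loss plots and generated images, not a verdict.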

* what is latent space?

Reading: https://towardsdatascience.com/understanding-latent-space-in-machine-learning-de5a7c687d8d

part 2: identifying a mode collapse in GAN

With a GAN generator model, mode collapse means that the vast number of points in the input latent space (e.g. a hypersphere of 100 dimensions in many cases) map to one or a small subset of generated images.

(* “mode” refers to a peak in the output distribution; a multi-modal distribution has more than one peak.)

signs of mode collapse:

- generated images showing a low diversity

- loss plot for generator (and probably the discriminator) showing oscillations over time
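
Low diversity is usually judged by eye, but a rough numeric proxy can help when comparing runs. The sketch below is an assumption-laden illustration, not a method from the reading: it measures the average Euclidean distance between pairs of flattened images and compares a generated batch with a real batch. The names `mean_pairwise_distance` and `diversity_ratio` are hypothetical, and the approach is only practical for small batches because it builds every pairwise difference in memory.

```python
import numpy as np


def mean_pairwise_distance(samples):
    """Average Euclidean distance between all distinct pairs of samples."""
    flat = np.asarray(samples, dtype=float)
    flat = flat.reshape(flat.shape[0], -1)  # one row per image
    n = flat.shape[0]
    if n < 2:
        raise ValueError("need at least two samples")
    # Differences between every pair of rows: shape (n, n, features).
    diffs = flat[:, None, :] - flat[None, :, :]
    dists = np.linalg.norm(diffs, axis=-1)
    upper = np.triu_indices(n, k=1)  # count each pair once
    return float(np.mean(dists[upper]))


def diversity_ratio(generated, real):
    """Spread of generated samples relative to real ones (near 0 = collapsed)."""
    real_spread = mean_pairwise_distance(real)
    if real_spread == 0.0:
        raise ValueError("real samples are all identical")
    return mean_pairwise_distance(generated) / real_spread
```

A ratio far below 1 suggests the generator is producing near-identical images; pixel-space distance is a crude measure, so it complements rather than replaces looking at the samples.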

likely causes to check:

- the latent dimension is too small (restricting the size of the latent dimensions directly forces the model to only generate a small subset of plausible outputs); try a larger latent space

- the data size is too small

part 3: identifying convergence failure in GAN

In the case of a GAN, a failure to converge refers to not finding an equilibrium between the discriminator and the generator. The likely way that you will identify this type of failure is that the loss for the discriminator has gone to zero or close to zero. In some cases, the loss for the generator may also rise and continue to rise over the same period. This loss pattern is most commonly caused by the generator outputting garbage images that the discriminator can easily identify.
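
The discriminator-loss signal can be checked automatically on a logged loss history. This is a minimal sketch under stated assumptions: the function name `first_convergence_failure`, the threshold of 0.05 for "close to zero", and the requirement of 50 consecutive steps are illustrative choices, not values from the reading.

```python
def first_convergence_failure(d_losses, threshold=0.05, patience=50):
    """Return the step where discriminator loss first stays near zero.

    A failure is flagged when the loss is below `threshold` for `patience`
    consecutive steps. Returns the index of the first step in that run,
    or None if no such run exists.
    """
    run_length = 0
    for step, loss in enumerate(d_losses):
        # Extend the current run of near-zero losses, or reset it.
        run_length = run_length + 1 if loss < threshold else 0
        if run_length >= patience:
            return step - patience + 1
    return None
```

Requiring a sustained run avoids flagging a single lucky batch; a short dip in discriminator loss that recovers is not treated as a failure.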

common causes of convergence failure (things to check and undo):

- one or both models having insufficient capacity

- an Adam optimizer configuration that is too aggressive

- very large or very small kernel sizes in the models

- problems with the training data